Hive外表演练

Textfile(hive建表默认文件类型)

Hive

CREATE TABLE test

(

siteid int,

citycode int,

username string,

pv int)

ROW FORMAT SERDE

‘org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe’

WITH SERDEPROPERTIES (

‘field.delim’=’,’,

‘serialization.format’=’,’)

STORED AS INPUTFORMAT

‘org.apache.hadoop.mapred.TextInputFormat’

OUTPUTFORMAT

‘org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat’

LOCATION

‘hdfs://xxxx:9002/user/hive/warehouse/test.db/test’

TBLPROPERTIES (

‘transient_lastDdlTime’=‘1634021246’)

StarRocks

-- 创建一个名为hive0的Hive资源

CREATE EXTERNAL RESOURCE "hive0"

PROPERTIES (

"type" = "hive",

"hive.metastore.uris" = "thrift://xxxx:9082"

);

-- 查看StarRocks中创建的资源

SHOW RESOURCES;

-- 删除名为hive0的资源

DROP RESOURCE "hive0";

-- 创建外表

CREATE EXTERNAL TABLE hive_test(

siteid

int,

citycode int,

username string,

pv int

) ENGINE=HIVE

PROPERTIES (

“resource” = “hive0”,

“database” = “test”,

“table” = “test”

);

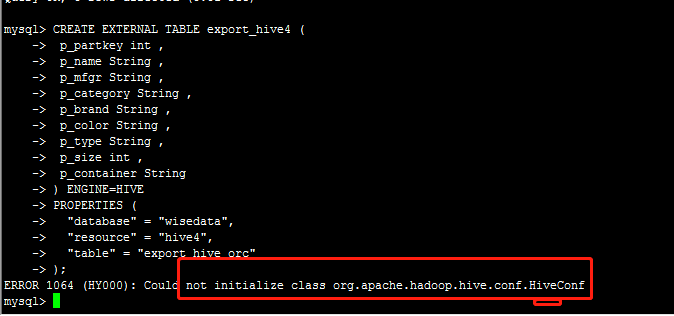

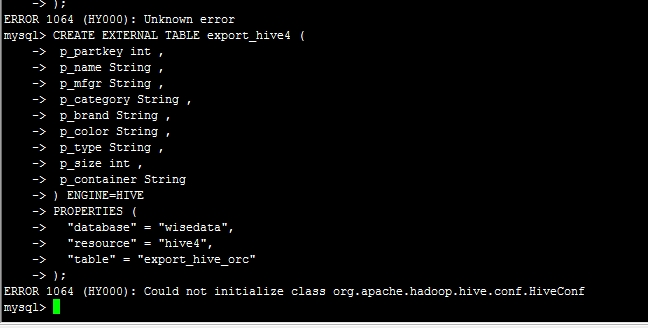

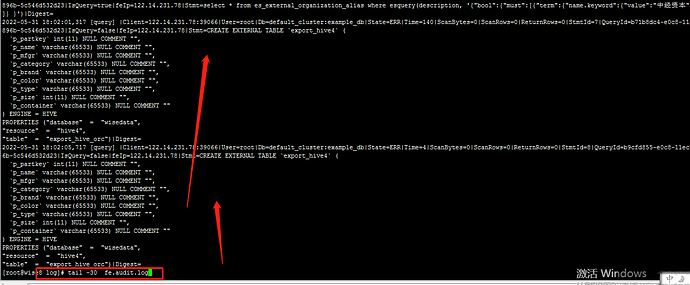

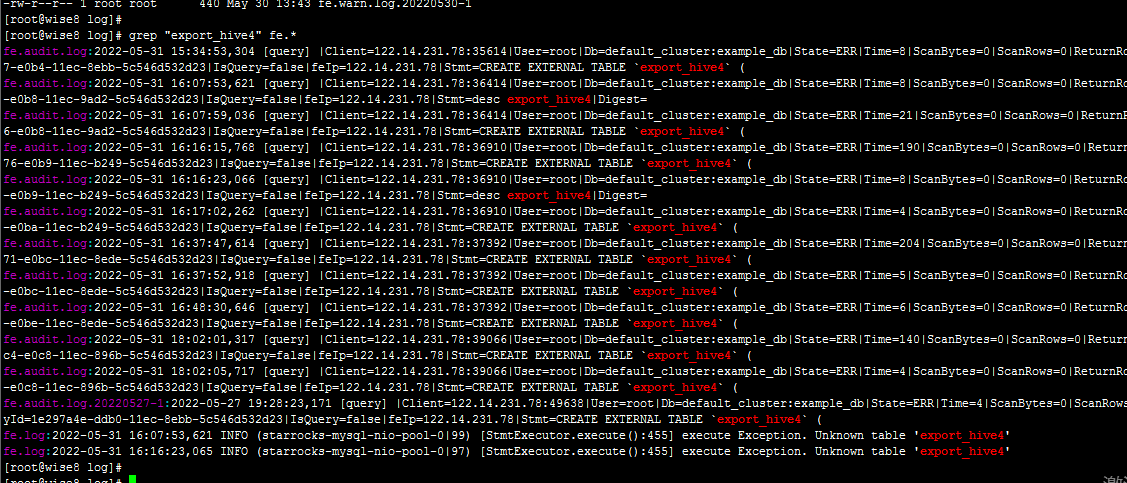

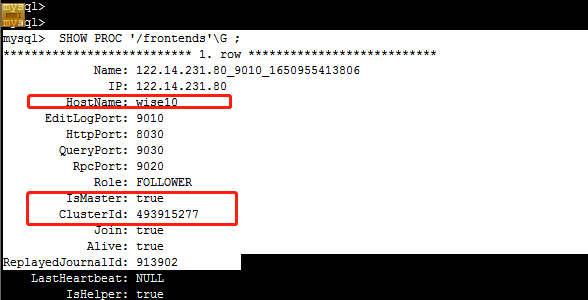

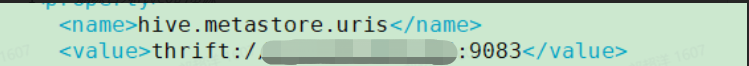

创建资源成功,创建外表失败报错如下:

get current notification event id failed: java.net.SocketException: Broken pipe (Write failed)

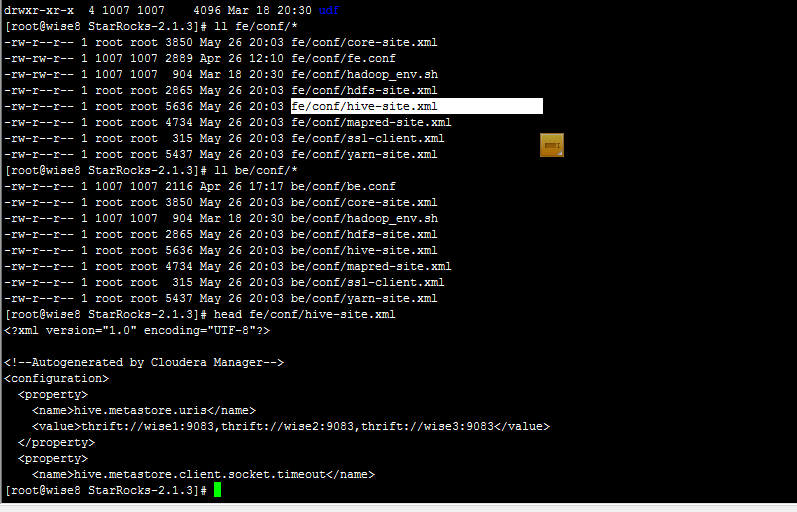

确认资源中hive.metastore.uris是否正确。

查看hive配置文件

cat /home/disk1/sr/app/apache-hive-2.3.7-bin/conf/hive-site.xml

端口错误,更改信息如下:

-- 删除名为hive0的资源

DROP RESOURCE "hive0";

-- 创建一个名为hive0的Hive资源

CREATE EXTERNAL RESOURCE "hive0"

PROPERTIES (

"type" = "hive",

"hive.metastore.uris" = "thrift://xxxx:9083"

);

查询外表报错:

mysql> select * from hive_test;

ERROR 1064 (HY000): get partition detail failed: org.apache.doris.common.DdlException: get hive partition meta data failed: unsupported file format [org.apache.hadoop.mapred.TextInputFormat]

不支持text文件类型外表。(默认textfile格式)

orc格式

Hive

## 建表

CREATE TABLE test

_orc(

siteid int,

citycode int,

username string,

pv int)

row format delimited fields terminated by ‘\t’

stored as orc

导入数据

insert into test_orc select siteid , citycode , username , pv from test;

show create table test_orc

CREATE TABLE test_orc(

siteid int,

citycode int,

username string,

pv int)

ROW FORMAT SERDE

‘org.apache.hadoop.hive.ql.io.orc.OrcSerde’

WITH SERDEPROPERTIES (

‘field.delim’=’\t’,

‘serialization.format’=’\t’)

STORED AS INPUTFORMAT

‘org.apache.hadoop.hive.ql.io.orc.OrcInputFormat’

OUTPUTFORMAT

‘org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat’

LOCATION

‘hdfs://xxxx:9002/user/hive/warehouse/test.db/test_orc’

TBLPROPERTIES (

‘transient_lastDdlTime’=‘1634731190’)

StarRocks查询外表

## 创建hive外表

CREATE EXTERNAL TABLE hive_test_orc(

siteid

int,

citycode int,

username string,

pv int

) ENGINE=HIVE

PROPERTIES (

“resource” = “hive0”,

“database” = “test”,

“table” = “test_orc”

);

查询外表

select * from hive_test_orc;

Hive更新数据:

## 插入数据

insert into test_orc select siteid , citycode , username , pv from test;

## 查询数据

hive> select * from test_orc;

OK

777 100 基本 1

778 101 概念 1

779 102 测试 1

780 103 spark 1

781 104 load 1

782 105 honest 1

777 100 基本 1

778 101 概念 1

779 102 测试 1

780 103 spark 1

781 104 load 1

782 105 honest 1

Time taken: 0.086 seconds, Fetched: 12 row(s)

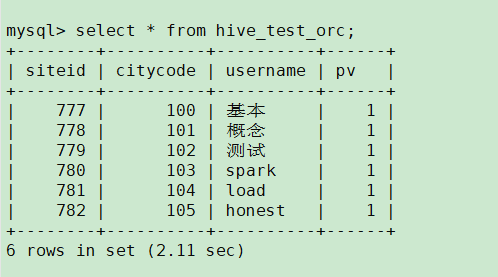

StarRocks查询数据

mysql> select * from hive_test_orc;

+--------+----------+----------+------+

| siteid | citycode | username | pv |

+--------+----------+----------+------+

| 777 | 100 | 基本 | 1 |

| 778 | 101 | 概念 | 1 |

| 779 | 102 | 测试 | 1 |

| 780 | 103 | spark | 1 |

| 781 | 104 | load | 1 |

| 782 | 105 | honest | 1 |

+--------+----------+----------+------+

6 rows in set (0.01 sec)

数据不同步。

refresh

mysql> REFRESH EXTERNAL TABLE hive_test_orc;

Query OK, 0 rows affected (1.31 sec)

mysql> select * from hive_test_orc;

+--------+----------+----------+------+

| siteid | citycode | username | pv |

+--------+----------+----------+------+

| 777 | 100 | 基本 | 1 |

| 778 | 101 | 概念 | 1 |

| 779 | 102 | 测试 | 1 |

| 780 | 103 | spark | 1 |

| 781 | 104 | load | 1 |

| 782 | 105 | honest | 1 |

| 777 | 100 | 基本 | 1 |

| 778 | 101 | 概念 | 1 |

| 779 | 102 | 测试 | 1 |

| 780 | 103 | spark | 1 |

| 781 | 104 | load | 1 |

| 782 | 105 | honest | 1 |

+--------+----------+----------+------+

12 rows in set (1.03 sec)

Parquet格式

Hive

## 建表

CREATE TABLE test

_parquet(

siteid int,

citycode int,

username string,

pv int)

stored as parquet

导入数据

insert into test_parquet select siteid , citycode , username , pv from test;

show create table test_orc

CREATE TABLE test_parquet(

siteid int,

citycode int,

username string,

pv int)

ROW FORMAT SERDE

‘org.apache.hadoop.hive.ql.io.parquet.serde.ParquetHiveSerDe’

STORED AS INPUTFORMAT

‘org.apache.hadoop.hive.ql.io.parquet.MapredParquetInputFormat’

OUTPUTFORMAT

‘org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat’

LOCATION

‘hdfs://xxxx:9002/user/hive/warehouse/test.db/test_parquet’

TBLPROPERTIES (

‘transient_lastDdlTime’=‘1634797148’)

StarRocks查询外表

## 创建hive外表

CREATE EXTERNAL TABLE hive_test_parquet(

siteid

int,

citycode int,

username string,

pv int

) ENGINE=HIVE

PROPERTIES (

“resource” = “hive0”,

“database” = “test”,

“table” = “test_parquet”

);

查询外表

mysql> select * from hive_test_parquet;

±-------±---------±---------±-----+

| siteid | citycode | username | pv |

±-------±---------±---------±-----+

| 777 | 100 | 基本 | 1 |

| 778 | 101 | 概念 | 1 |

| 779 | 102 | 测试 | 1 |

| 780 | 103 | spark | 1 |

| 781 | 104 | load | 1 |

| 782 | 105 | honest | 1 |

±-------±---------±---------±-----+

6 rows in set (0.04 sec)

Schame change

add columns

Hive

hive> alter table test_orc add columns (name1 varchar(10));

OK

Time taken: 0.055 seconds

hive> select * from test_orc;

OK

777 100 基本 1 NULL

778 101 概念 1 NULL

779 102 测试 1 NULL

780 103 spark 1 NULL

781 104 load 1 NULL

782 105 honest 1 NULL

777 100 基本 1 NULL

778 101 概念 1 NULL

779 102 测试 1 NULL

780 103 spark 1 NULL

781 104 load 1 NULL

782 105 honest 1 NULL

Time taken: 0.079 seconds, Fetched: 12 row(s)

StarRocks 查询:

mysql> select * from hive_test_orc;

+--------+----------+----------+------+

| siteid | citycode | username | pv |

+--------+----------+----------+------+

| 777 | 100 | 基本 | 1 |

| 778 | 101 | 概念 | 1 |

| 779 | 102 | 测试 | 1 |

| 780 | 103 | spark | 1 |

| 781 | 104 | load | 1 |

| 782 | 105 | honest | 1 |

| 777 | 100 | 基本 | 1 |

| 778 | 101 | 概念 | 1 |

| 779 | 102 | 测试 | 1 |

| 780 | 103 | spark | 1 |

| 781 | 104 | load | 1 |

| 782 | 105 | honest | 1 |

+--------+----------+----------+------+

12 rows in set (0.01 sec)

refresh

## refresh 外表

REFRESH EXTERNAL TABLE hive_test_orc;

## 查询外表

mysql> select * from hive_test_orc;

+--------+----------+----------+------+

| siteid | citycode | username | pv |

+--------+----------+----------+------+

| 777 | 100 | 基本 | 1 |

| 778 | 101 | 概念 | 1 |

| 779 | 102 | 测试 | 1 |

| 780 | 103 | spark | 1 |

| 781 | 104 | load | 1 |

| 782 | 105 | honest | 1 |

| 777 | 100 | 基本 | 1 |

| 778 | 101 | 概念 | 1 |

| 779 | 102 | 测试 | 1 |

| 780 | 103 | spark | 1 |

| 781 | 104 | load | 1 |

| 782 | 105 | honest | 1 |

+--------+----------+----------+------+

12 rows in set (0.03 sec)

refresh不生效,可以正常查询,但是要看见新增列需要重新创建外表。

Delete columns

Hive

## 删除pv列

hive> alter table test_parquet replace columns(

> siteid int,

> citycode int,

> username string

> );

OK

Time taken: 0.046 seconds

## 查询hive数据

hive> select * from test_parquet;

OK

777 100 基本

778 101 概念

779 102 测试

780 103 spark

781 104 load

782 105 honest

Time taken: 0.077 seconds, Fetched: 6 row(s)

StarRocks 查询:

## 查询数据

mysql> select * from hive_test_parquet;

+--------+----------+----------+------+

| siteid | citycode | username | pv |

+--------+----------+----------+------+

| 777 | 100 | 基本 | 1 |

| 778 | 101 | 概念 | 1 |

| 779 | 102 | 测试 | 1 |

| 780 | 103 | spark | 1 |

| 781 | 104 | load | 1 |

| 782 | 105 | honest | 1 |

+--------+----------+----------+------+

6 rows in set (0.00 sec)

## refresh外表

REFRESH EXTERNAL TABLE hive_test_parquet;

## 查询数据

mysql> select * from hive_test_parquet;

+--------+----------+----------+------+

| siteid | citycode | username | pv |

+--------+----------+----------+------+

| 777 | 100 | 基本 | 1 |

| 778 | 101 | 概念 | 1 |

| 779 | 102 | 测试 | 1 |

| 780 | 103 | spark | 1 |

| 781 | 104 | load | 1 |

| 782 | 105 | honest | 1 |

+--------+----------+----------+------+

6 rows in set (0.01 sec)

refresh不生效,需要重新建立外表

Hive导入新数据

## 导入数据

insert into test_parquet select siteid

,

citycode

,

username

from test;

查询hive数据

hive> select * from test_parquet;

OK

777 100 基本

778 101 概念

779 102 测试

780 103 spark

781 104 load

782 105 honest

777 100 基本

778 101 概念

779 102 测试

780 103 spark

781 104 load

782 105 honest

Time taken: 0.087 seconds, Fetched: 12 row(s)

StarRocks 查询:

## refresh外表

REFRESH EXTERNAL TABLE hive_test_parquet;

## 查询数据

mysql> select * from hive_test_parquet;

+--------+----------+----------+------+

| siteid | citycode | username | pv |

+--------+----------+----------+------+

| 777 | 100 | 基本 | 1 |

| 778 | 101 | 概念 | 1 |

| 779 | 102 | 测试 | 1 |

| 780 | 103 | spark | 1 |

| 781 | 104 | load | 1 |

| 782 | 105 | honest | 1 |

| 777 | 100 | 基本 | NULL |

| 778 | 101 | 概念 | NULL |

| 779 | 102 | 测试 | NULL |

| 780 | 103 | spark | NULL |

| 781 | 104 | load | NULL |

| 782 | 105 | honest | NULL |

+--------+----------+----------+------+

12 rows in set (0.02 sec)

可以查询,pv列为空,没报错。