We currently have one FE node and two BE nodes. Today three of our Flink sync tasks failed, all with the following error:

com.starrocks.connector.flink.manager.StarRocksStreamLoadFailedException: Failed to flush data to StarRocks, Error response:

{"Status":"Fail","BeginTxnTimeMs":0,"Message":"tablet 22051 has few replicas: 1, quorum: 2, cluster: 189335494","NumberUnselectedRows":0,"CommitAndPublishTimeMs":0,"Label":"d23016b0-ba37-4402-a1a6-1092f928998c","LoadBytes":0,"StreamLoadPutTimeMs":0,"NumberTotalRows":0,"WriteDataTimeMs":0,"TxnId":87352,"LoadTimeMs":0,"ReadDataTimeMs":0,"NumberLoadedRows":0,"NumberFilteredRows":0}

{}

at com.starrocks.connector.flink.manager.StarRocksStreamLoadVisitor.doStreamLoad(StarRocksStreamLoadVisitor.java:104)

at com.starrocks.connector.flink.manager.StarRocksSinkManager.asyncFlush(StarRocksSinkManager.java:324)

at com.starrocks.connector.flink.manager.StarRocksSinkManager.lambda$startAsyncFlushing$0(StarRocksSinkManager.java:161)

at java.lang.Thread.run(Thread.java:748)
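For anyone reading the `Message` field above, here is a minimal sketch of how I understand the numbers in it. It assumes the write quorum is a simple majority of the configured replicas (`replication_num // 2 + 1`), which is my assumption and not something the error response states. The helper names `majority_quorum` and `parse_replica_message` are hypothetical and are not part of the Flink connector or StarRocks.

```python
import re

def majority_quorum(replication_num: int) -> int:
    # Assumption: a write needs a simple majority of the configured replicas.
    return replication_num // 2 + 1

def parse_replica_message(message: str):
    # Pull the tablet id, healthy replica count and required quorum
    # out of a message shaped like the one in the error response.
    m = re.search(r"tablet (\d+) has few replicas: (\d+), quorum: (\d+)", message)
    if m is None:
        return None
    tablet_id, alive, needed = (int(g) for g in m.groups())
    return {
        "tablet_id": tablet_id,
        "alive": alive,
        "quorum": needed,
        "missing": max(needed - alive, 0),
    }

msg = "tablet 22051 has few replicas: 1, quorum: 2, cluster: 189335494"
info = parse_replica_message(msg)
print(info)
# Replica counts whose majority matches the reported quorum (checked for 1..3 only).
print([n for n in (1, 2, 3) if majority_quorum(n) == info["quorum"]])
```

Under that assumption, a reported quorum of 2 fits a table with 2 or 3 replicas, and only 1 replica of tablet 22051 was usable when the load ran, so the write was one replica short.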

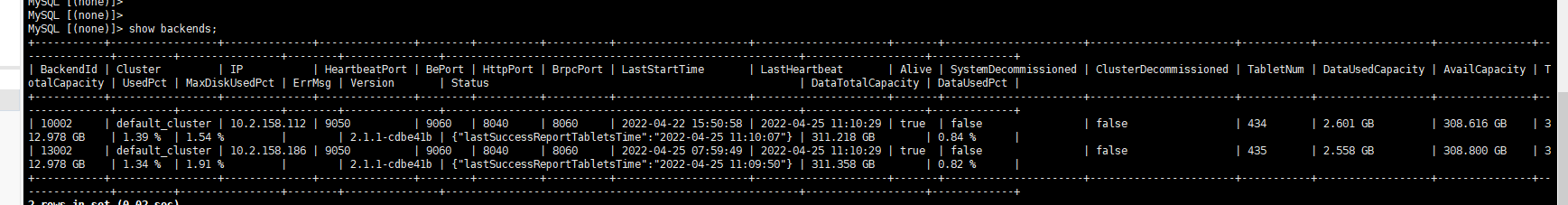

How can this error be resolved?